Scheduling and the long term

Over the last couple of months, we have been looking at Marketing Automation, in particular from a Unica Campaign point of view. This time we conclude the detailed articles by considering how to combine all the previous parts in our “Lights Out” world, to keep the lights out. This means looking at scheduling and the long term, along with additional steps you can take to make your campaigns and sessions more resilient.

We will start with the built-in scheduler that is part of Unica Marketing Platform (UMP). UMP is the shell that Campaign sits on top of; it provides a framework for the Unica applications, including user management, auditing and, of course, the Scheduler. The fact that the Scheduler is not part of Campaign becomes important later. The Scheduler also supersedes the Schedule flowchart process, which we now usually only see used to halt flowcharts for approval or to invoke a batch trigger. One reason we do not recommend using this process for actual scheduling is that, by default, a system restart will not resurrect any non-running flowcharts, and the flowchart needs to be running to start the Schedule process! The Platform scheduler, on the other hand, will start up with a system restart (unless you tell it otherwise).

Creating Linked Schedules

1. Create your first schedule – you will need to be in flowchart view mode.

2. Usually you would skip past “Override Flowchart Parameters for..” but this screen can be useful if you wish to run one flowchart in multiple ways, e.g. setting a User Variable to determine a particular region or product group and then running for multiple regions/groups.

3. Schedule a run. Give the schedule a name and description (always use descriptions across the whole platform to help others and future colleagues). You can now daisy-chain schedules by creating a schedule token for success and for failure, but try to make the names meaningful and follow some sort of convention, e.g. <PROCESS>_PASS and <PROCESS>_FAIL.

4. Set your Start Parameters:

   a. Now – for immediate run

   b. On a Date and Time – for future running

   c. On a trigger – here’s where we put the receiving half of the daisy-chained schedules. So if the invoking process had a token on success called MYPROC_PASS, then the schedule to be triggered next in the chain should be looking for MYPROC_PASS

   d. On a trigger after a date – as above for the trigger, but only once a date has been reached

   e. On completion of other tasks – this one is important as it allows you to select more than one invoking schedule, so that all component parts must complete before moving on. This allows those parts to be run in parallel. An example of this will follow.

5. Set your recurrence definitions – set a repeat pattern, start and end dates etc. Note that this pattern will be invoked every time the schedule is triggered, so if the calling process is itself recurring, there is usually no need to set up a pattern for the schedule it subsequently calls.
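A daisy chain only works if every token a schedule waits for is actually sent by something, and a mistyped token name fails silently. The following is a minimal sketch for checking that outside the product. It is not a Unica API: the `Schedule` class, the schedule names and the idea of keeping a hand-maintained list of schedules are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Schedule:
    """Hypothetical, hand-maintained description of one Platform schedule."""
    name: str
    waits_for: set = field(default_factory=set)  # tokens that trigger this schedule
    sends: set = field(default_factory=set)      # tokens sent on success or failure


def tokens_for(process: str) -> set:
    """Build the <PROCESS>_PASS / <PROCESS>_FAIL pair suggested in the text."""
    return {f"{process}_PASS", f"{process}_FAIL"}


def orphan_waits(schedules, external_tokens=frozenset()):
    """Return {schedule name: tokens it waits for that nothing sends}.

    external_tokens lists tokens sent from outside the chain,
    for example by a script run by another team.
    """
    sent = set(external_tokens)
    for schedule in schedules:
        sent |= schedule.sends
    result = {}
    for schedule in schedules:
        missing = schedule.waits_for - sent
        if missing:
            result[schedule.name] = missing
    return result


# Illustrative chain; all names are made up.
chain = [
    Schedule("MYPROC", sends=tokens_for("MYPROC")),
    Schedule("NEXTPROC", waits_for={"MYPROC_PASS"}, sends=tokens_for("NEXTPROC")),
    Schedule("REPORT", waits_for={"NEXTPROC_PASSED"}),  # mistyped token: never triggered
]

print(orphan_waits(chain))  # {'REPORT': {'NEXTPROC_PASSED'}}
```

Keeping the token pair in one helper means the convention lives in a single place, which is the main point of having a convention at all.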

At this point, let’s look at a typical system setup and add an idea around scheduling.

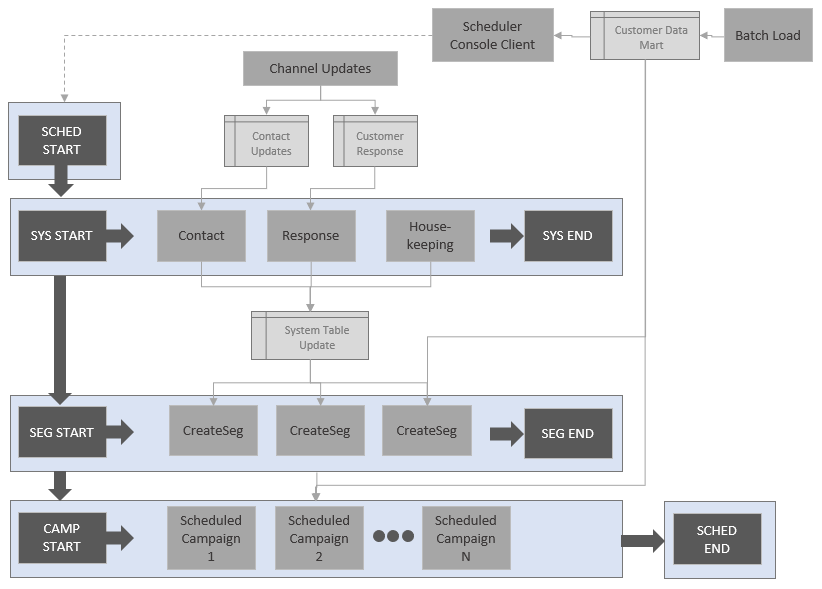

Example Schedule

In a typical production system, there are usually a couple of main inputs, the update to your main Customer Data Mart and data returned from your channel systems and partners detailing customer response to your communications. The latter should form part of some regular system processing to upload into or update your Contact and Response history although some organisations will include these updates as part of their Data Mart. Other system processes may include regular housekeeping, e.g. clearing down old Contacts to keep system runtimes to a manageable level. Once the regular system processes are completed you should then be updating your Strategic Segment definitions. Finally, all the available data (System, Data Mart and strategic segments) will be available to run your regular campaigns.

I like to create checkpoint (Session) flowcharts that do nothing other than write an entry into our system audit table, as mentioned a few articles back. This means that over time you can see how long the processes between the checkpoints are taking to run, as a measure of system health or a pointer to efficiency measures that might need implementing, or even hardware upgrades that could be required. You can use these checkpoints as email notification points (see below) to save on multiple messages being sent for every single scheduled component. Another reason to do this is to make the maintenance of the schedules a lot easier over time – you need only add or remove calling or called flowcharts at the checkpoints without having to touch any individual flowcharts. You can do this using the “On completion of other tasks” start parameter (4e above).
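As a rough illustration of how the checkpoint entries can be turned into a health measure, here is a minimal sketch. It assumes the audit rows have already been read into (checkpoint name, timestamp) pairs; the row layout, the checkpoint names and the timestamps are all hypothetical and will differ from your own audit table.

```python
from datetime import datetime


def stage_durations(entries):
    """Return (stage label, minutes) for each pair of consecutive checkpoints.

    entries: iterable of (checkpoint_name, timestamp) for a single daily run.
    """
    ordered = sorted(entries, key=lambda entry: entry[1])
    durations = []
    for (prev_name, prev_ts), (name, ts) in zip(ordered, ordered[1:]):
        minutes = (ts - prev_ts).total_seconds() / 60
        durations.append((f"{prev_name} -> {name}", minutes))
    return durations


# Made-up audit rows for one run; order in the table does not matter.
audit_rows = [
    ("SEGMENTS_DONE", datetime(2024, 1, 15, 4, 15)),
    ("SCHED_START", datetime(2024, 1, 15, 2, 0)),
    ("SYSTEM_DONE", datetime(2024, 1, 15, 2, 45)),
]

for stage, minutes in stage_durations(audit_rows):
    print(f"{stage}: {minutes:.0f} min")
```

Comparing these figures week on week is what makes a slow drift in runtimes visible before it becomes a problem.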

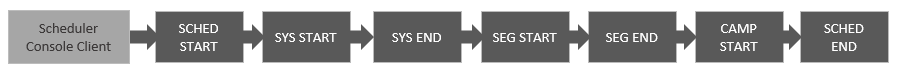

In this way, all the checkpoints will run in sequence, guaranteeing availability of required data, but within the checkpoints you can run processes, campaigns and sessions in parallel to minimise the overall runtime of your daily schedule. The daily calling schedule will then look like this:

At the start here, I have added the Scheduler Console Client (SCC). A lot of organisations use a timed schedule (4b + 5, i.e. “On a Date and Time” with a recurrence pattern) as their first start point, but this can cause problems when the Data Mart completion is delayed or fails (because those things never happen!).

Sitting inside the Marketing Platform (web application server directory [PLATFORM_HOME]/tools/bin) is a powerful program called Scheduler_Console_Client. You can call this program and supply a scheduler token to replicate the “On a trigger” start parameter (4c above), i.e. any schedule waiting for this token will then be run. There are two main uses for this program here:

- Work with your Customer Data Mart technical team and get them to run the program to trigger SCHED_START. The entire process will be run as soon as the dependent data is finalised, or not run if there have been issues.

- Restart a checkpoint, or even the entire process in case of (partial) failure. For example, if a system issue had prevented the Strategic Segments from running but the system processes had completed you could invoke SEG_START to kick off the remaining schedules. Note – going into a schedule and flipping it to run as “Now” means you then have to go back afterwards and restore the previous definition which introduces risk.
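Either use can be wrapped in a small script so the Data Mart team or an operator does not have to remember the call. The sketch below is one way to do that under several assumptions: the install path, the script file name, the user name and the argument layout (`-t <token> <user>`) are placeholders that must be checked against the documentation for your Platform version, and treating a zero exit code as success is also an assumption.

```python
import subprocess
from pathlib import Path


def build_trigger_command(platform_home, token, user,
                          script_name="scheduler_console_client.sh"):
    """Build the command line for sending a scheduler token.

    ASSUMPTION: script_name and the "-t <token> <user>" layout are
    illustrative; confirm both for your own installation.
    """
    script = Path(platform_home) / "tools" / "bin" / script_name
    return [str(script), "-t", token, user]


def send_trigger(command, runner=subprocess.run):
    """Run the command and report whether it exited with code 0.

    This only tells you the token was sent. It says nothing about
    whether the triggered flowcharts later succeed.
    """
    completed = runner(command, capture_output=True, text=True)
    return completed.returncode == 0


# Hypothetical install path and user; printing rather than running.
command = build_trigger_command("/opt/unica/Platform", "SEG_START", "scheduler_user")
print(" ".join(command))
```

To actually send the token you would call `send_trigger(command)`. The `runner` parameter exists so the wrapper can be tested without a Platform installation.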

In this example we mentioned sending email notifications for some of these checkpoints. Here’s an overview.

Scheduler Notifications Using Email

- Set up the email server configuration

- Set up notification groups if you’d like the notifications to go to more than one individual (useful during absence of administration staff)

- Subscribe to Email Notifications

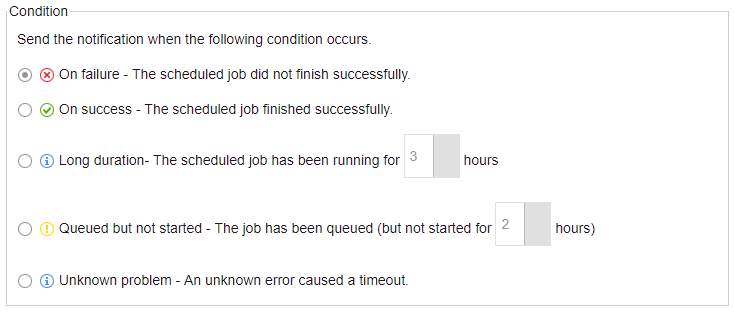

- Create the scheduler notifications for any schedule (On Failure, On Success, Long Duration, Queued not started, Unknown Problem) – Note, you will have to create the schedule first before you can go back in and “Edit Job notifications”.

It is still really useful to have an email program configured on your Campaign Analytic server that can be called via a batch trigger for events from within a flowchart. With examples given in these articles you could then flag errors and issues such as:

- Unexpected data volumes (using a custom macro to check on table sizes) – too small, too large, zero quantity

- Unexpected dates (e.g. should the maximum date in a table be as of yesterday? Again, a Custom Macro can get you the maximum date in a table)
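The decision logic behind both checks is simple enough to sketch. The example below is plain Python rather than a Unica custom macro, and it assumes the row count and maximum date have already been retrieved; the thresholds and function names are illustrative only.

```python
from datetime import date, timedelta


def check_volume(row_count, expected_min, expected_max):
    """Return a description of the volume problem, or None if it looks normal."""
    if row_count == 0:
        return "zero quantity"
    if row_count < expected_min:
        return "too small"
    if row_count > expected_max:
        return "too large"
    return None


def check_freshness(max_date, today, max_age_days=1):
    """Return a message if the newest date in a table is older than allowed."""
    if today - max_date > timedelta(days=max_age_days):
        return f"latest date {max_date.isoformat()} is older than {max_age_days} day(s)"
    return None


# Illustrative values: an unusually small load with data four days old.
issues = [
    issue
    for issue in (
        check_volume(120, expected_min=1000, expected_max=50000),
        check_freshness(date(2024, 1, 11), today=date(2024, 1, 15)),
    )
    if issue is not None
]
print(issues)
```

A non-empty list is the point at which the flowchart would call the email program through its batch trigger.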

Hint: A few times we have mentioned custom macros to return a value. In this case I would create a single row file containing 1 entry for customer ID, either 1 for numeric or ‘1’ for text. Then read this file and output to a strategic segment. Use this segment for input to all SQL processes that require no process box table input. You can then write your Custom Macro as “SELECT 1, {some value…}”, and assign to a UserVar via a Derived Field. A potential 5 Minute Feature – watch this space…

These notifications are important and should be checked. At one client recently, they had not noticed that their system had halted, and no schedules or campaign runs had occurred for several days!

External Schedulers

Now and again we are asked to trigger Unica campaigns and processes from an external scheduler such as Tivoli Workload Scheduler. We already know that we have a way of triggering Unica flowcharts externally via Scheduler_Console_Client, but here we hit the problem we mentioned earlier: this program is part of Marketing Platform and not Campaign. When it is invoked, any responding schedules are identified and added to the scheduler run queue.

When a schedule is started, it runs the associated object (flowchart) and, provided there have been no communication issues at the system level, a status will be recorded against the run. All this information is stored in the scheduler tables in the Marketing Platform system database. One key requirement of external scheduling is always the feedback loop, so that subsequent processes can be run. Because of the disconnect between the calling process (SCC script) and the run completion of the flowchart, it can be difficult to get that notification. You can look back at the tables or get your flowcharts to create dummy notification files on the file system, but these require clean-up and, if the scheduler is not even running, you will get nothing. In short, this is not a simple undertaking and requires careful planning and resource allocation.
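To make the notification-file approach concrete, here is a minimal sketch of the waiting side, as an external scheduler job might run it. It assumes the flowchart writes an empty marker file when it finishes; the file name, polling interval and timeout are hypothetical. The timeout matters because, as noted above, a stopped scheduler produces no file at all.

```python
import time
from pathlib import Path


def wait_for_marker(marker, timeout_seconds, poll_seconds=30,
                    sleep=time.sleep, clock=time.monotonic):
    """Wait until the marker file exists, then delete it and return True.

    Returns False if the file has not appeared by the deadline, which
    the caller should treat as a failure rather than wait forever.
    """
    marker = Path(marker)
    deadline = clock() + timeout_seconds
    while True:
        if marker.exists():
            marker.unlink()  # clean up so a stale file cannot satisfy the next run
            return True
        if clock() >= deadline:
            return False
        sleep(poll_seconds)
```

A call such as `wait_for_marker("/data/unica/markers/SEG_DONE.flag", timeout_seconds=4 * 3600)` (path invented for the example) would then decide whether the external scheduler moves on or raises an alert.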

Continual Running

Enough now of the scheduling itself. We have everything ticking over but there are some longer-term considerations:

- Housekeeping – data volumes will normally increase. Contact history will grow, so you should think of a longer-term strategy for archival and deletion to keep flowchart runtimes down. Flowcharts may be written to create intermediate tables or files – if possible, create a naming convention that allows you to identify old, test or obsolete data and build scripts or SQL to clear it down. These can be run from batch triggers against a Schedule process (or MailList, or Flowchart Advanced Settings), or as SQL itself using the advanced functionality within a Select process.

- Flowcharts may need updating. Either you can update a flowchart that’s in production, but that can cause issues around testing, or you can drop in a new version. Using the scheduling checkpoints above should make this easier. Note, though, that you should also bear in mind the prod vs test run considerations we mentioned previously.

- Regular input files will build up over time.

- The same is true for Intermediate files such as the data to be uploaded as part of the Unica -> Acoustic accelerator or files created as part of the bulk load scripts that are hopefully configured for your system. Ensure that the calling scripts clear down any unwanted data unless you are currently debugging system issues.
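A clear-down script driven by a naming convention can be very small. The sketch below is one possible shape, assuming temporary and test files share known prefixes and that age is judged by file modification time; the prefixes, directory and retention period shown in the usage note are hypothetical. It defaults to a dry run so nothing is deleted until you have checked the list.

```python
import time
from pathlib import Path


def find_stale_files(directory, prefixes, max_age_days, now=None):
    """List files whose name starts with a given prefix and that are older than max_age_days."""
    now = time.time() if now is None else now
    cutoff = now - max_age_days * 86400
    stale = []
    for path in sorted(Path(directory).iterdir()):
        if (path.is_file()
                and path.name.startswith(tuple(prefixes))
                and path.stat().st_mtime < cutoff):
            stale.append(path)
    return stale


def clear_down(paths, dry_run=True):
    """Delete the given files, or only report them when dry_run is True."""
    for path in paths:
        if dry_run:
            print(f"would delete {path}")
        else:
            path.unlink()
```

For example, `clear_down(find_stale_files("/data/unica/tmp", ["TMP_", "TEST_"], max_age_days=14))` would report candidates without removing anything; pass `dry_run=False` once the output looks right, and skip the job entirely while you are debugging system issues.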

There are many more causes of system issues over time. This is one of the main reasons that Purple Square provide a comprehensive system Health Check.

So, this concludes the detailed sections of our look into Marketing Automation using IBM Campaign. Next time we will summarise the articles but for now, if you are interested in any of the subjects raised around scheduling and longer term running, including what could be part of a system Health check and how it could help your organisation, please get in touch with us.
