Data Processing the ins and outs

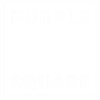

In this continuing series, we look at data processing the ins and outs, and how data gets loaded to your Unica Campaign system, how outputted and the issues you may face when trying to automate.

Unica Campaign flowcharts interface to the outside world via table “catalogs” and these connect via a mapping process to files and database tables. The data will probably be updated regularly, and usually daily. In an automated environment, you will generally have one or more of these situations:

- Database

- New data is appended to a database table (transactional)

- Old data is replaced in a table by the new data (table truncated first)

- The New data is added as a new version with a version or as-of date-stamp (old data remains)

- File

- New data is appended to a file (transactional)

- Old data is replaced in a file by the new data (file cleared first)

- A new file is created (e.g. tagged with a date-stamp)

I have only come across 1c on a couple of occasions and would always advise against it. Having to append date selection criteria to every single select process is painful and often you will just have to Extract out the current data, anyway, effectively rendering the data source irrelevant.

When the datasource is completely replaced (1b or 2b) you normally need no additional processing and can just query as is. This situation is usually representative of a Customer/Marketing Data Mart refresh.

Transactional data (1a, 2a) that is regularly appended often requires a process to identify the very latest data rows and will always require an update date in the data itself. A common example is the action table that may be generated by a channel partner that is used to update Response History via the Response process. This can be achieved in a number of ways:

- Select everything loaded since a known start time each day. This is very simple to achieve by runs the risk of missing data if the loading of the data has been delayed and this regularly seems to happen!

- Select everything but use some sort of SQL MINUS processing to extract out any data already processed. Over time this can take longer as the data sources start to accumulate history. It also leans on raw SQL which is means an additional skillset for your team and less accessibility of logic to your management.

- Select everything updated since the date of last processing. This requires that you retain the date you last ran the process and this was covered previously by use of a processing audit table. Alternatively, you could create a custom macro to read the latest update date in your output table.

Issues can arise when there are system problems. Delays in data arrival or system downtime should be catered for in your flowcharts so they can easily catch-up.

Sometimes you might want to re-run an automated flowchart against older data. This can happen if some sort of recovery has to be performed against data already loaded. User variables are a good way to easily change the effective run date of an entire flowchart. An “IF” statement in a derived field can be used to override the automated calculated value with a non-Null value in a variable say.

You should also watch out for updates to those critical data sources such as suppression and exclusion lists. It could be that all the rest of the data is updated but there has been an issue here. In these cases, you can build a decision point into your flowchart (using the previously discussed segment process). Criteria here would be that the latest update date of a particular data source is within an allowable tolerance. Again, use a Custom Macro to identify the date and load the single value into a User Variable. Then build the UserVar into the criteria to split the mutually exclusive cells of InDate, OutOfDate.

Hint: It has been mentioned a couple of times already, but due to the power of being able to parameterise a custom macro, you only need to provide one per datasource and at runtime call specifying the table and date field you wish to find the latest of e.g.

CM_Latest_Date(table, datefield)

SELECT 1,MAX(<datefield>) FROM <table>

The final category mentioned above was 2c. Here a new date-stamped file arrives every day for example. Unfortunately, although you can date-stamp a file name on the way out of Unica Campaign using a UserVar and snapshot, the same cannot be said of reading in a pattern matched filename via a catalog mapping. As the data mapping must be defined at runtime you either need to remap the file in every input cycle (defeating the Automation part of Marketing Automation) or land the data into a fixed location (table, via e.g. sqlldr or sqlcmd or to a file). This will normally require a script of some sort. Scripts can be called as part of stored triggers which in turn can be invoked from Schedule or MailList processes:

…or the Advanced settings of a flowchart (Although this would be after you need the file so would need to be part of a separate flowchart and called first!):

If you have identified that you cannot run a flowchart due to unavailability of data, you should send an email to interested parties (either an individual or a group). Again, a good way to do this is via a script from a stored trigger to call an email client on the Campaign Analytic Server. An email notification from the Marketing Platform scheduler (covered next time) may not be appropriate because you would like your flowchart to complete successfully, just not by processing any data.

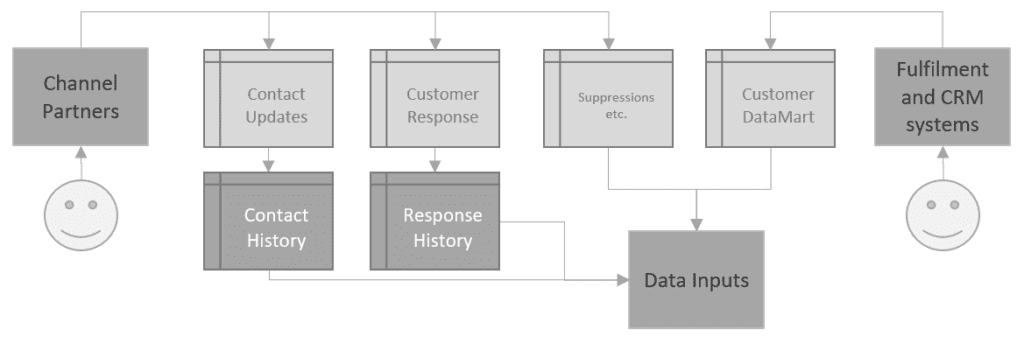

Hopefully by this stage we now have all our data inputs and are ready to run the flowchart using the building blocks we talked about in previous weeks. At the other end of the flowchart we have our outputs. These would normally be:

- Strategic segments – fixed lists of IDs for use in other flowcharts, using the CreateSeg process

- Intermediate files or tables to drive further flowcharts – Snapshot functionality to create, Catalog mappings to read in on subsequent flowcharts.

- Output files for analysis purposes – Create csv type files for loading into files to be shared via email or to tables to which you can connect your organisation’s BI software. That’s assuming of course you are not solely relying on Unica’s own Cognos component.

- Contact data for loading to Acoustic (via the accelerator), using scripts called as part of MailList or Schedule processes

- Contact data for loading to Acoustic (via the Native connectors), using the flowchart contact processes:

- Contact data for loading to another platform (e.g. via SFTP) using snapshot output followed by a batch script call to move the file to a location that can be picked up by a channel partner. You could partner this also with the email notification method above to alert the partner that a file is imminent.

Watch out for that contact data though as it will contain personal data and be subject to processing under the terms of GDPR. Be careful where it is stored or sent and who has access, and only retain for as long as necessary!

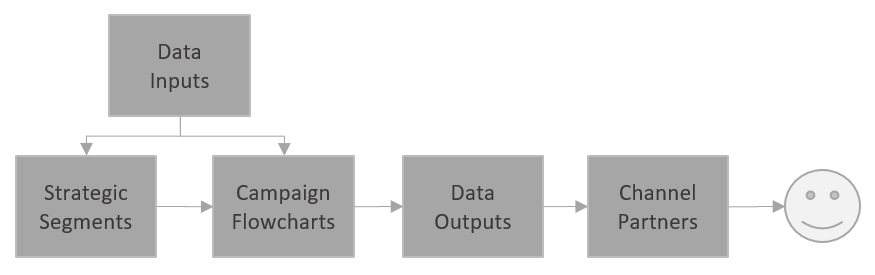

Another consideration you need to make against all these outputs is whether you are running a flowchart in a production mode or as a test. When running as a test, a MailList process will not write to contact history. It will however create output tables or write to an alternate destination as part of the MailList:

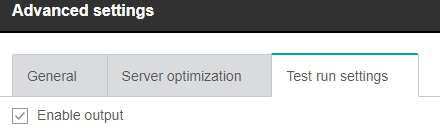

Some of this behaviour can be changed by updating the flowchart Advanced settings:

Care needs to be taken that when running an automated flowchart as a test (either through up-front design or by later enhancements), that you do not accidentally send out data to a channel. For those of a more adventurous nature, you can build a Custom Macro to read the system tables and join UA_Flowchart to UA_CCRunLog to calculate at run time whether your flowchart is running as Test or Prod (Test run of a process is not covered though). This can then be used to drive processing (Segment process as above – e.g. load the data to a different file), or output as a field to channel partners with instruction to process anything as “Test” differently.

We are nearing the end of our series of articles on Marketing Automation using Unica Campaign. Next time we will look at the actual automation part itself in terms of scheduling and longer term run considerations. We have discussed just some of the considerations around data processing here but in reality, they will be dependent on your organisation’s requirements and environment. If you wish to understand more about using Unica Campaign for Marketing automation including working with inputting and outputting data, please get in touch.

Like what you see?

Subscribe to our newsletter for customer experience thought leadership and marketing tips and tricks.